Hi Garuda folks,

[I'm not sure whether this is an issue or feedback with a feature request, so I flagged it as the latter.]

I have been using Garuda for some time now, but updating is still the most inconvenient and risky part for me. Every time, I have to check the free disk space manually and clean up if necessary, and I am not happy with this recurring manual effort.

(Details: my root partition is unfortunately limited to 48 GB at the moment. My cleanup process is to clear the entire pacman cache with `sudo pacman -Scc` (because it simply works; “clear package cache” in Garuda Assistant might help too) and to delete half to three quarters of the old snapshots. In total, this frees half of the 46 GB used on the root partition.)

In my Dr460nized KDE Plasma edition, updates do not abort when the root partition lacks enough free space for the whole update, e.g. if I accidentally trigger a full update instead of updating only one package. The failing update then simply breaks my system, silently! The kernel binaries vanished, as did other updated packages. (This is a fact, not an allegation.) Fortunately, I am prepared, but I actually have to restore a snapshot, which I do with Btrfs Assistant. This trouble seems quite unnecessary, and I guess it hurts usability for ordinary users.

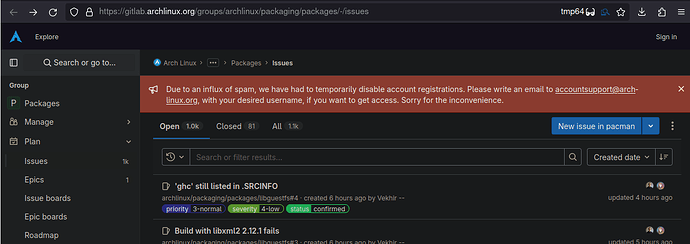

An old Arch post describes the same problem.

Would it be possible to add a simple option or feature to `garuda-update` that aborts the update beforehand if the worst-case space requirement, plus a certain margin, does not fit into the partition? It would help a lot.

Or does this option already exist?

It would also be very cool if it could first offer to clear the package cache and retry, aborting only as a last resort.
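To make the request more concrete, here is a minimal Python sketch of the check, clean, retry, abort flow I have in mind. It is not how `garuda-update` works; all names (`has_enough_space`, `pre_update_check`, `root_free_bytes`) and the 20% default margin are hypothetical. The required-space estimate is simply passed in, because computing a realistic worst case (downloads, installed sizes, the pre-update snapshot) is exactly the part the tool would have to solve. The `clear_cache` callback stands in for asking the user and running `sudo pacman -Scc`.

```python
import shutil
from typing import Callable, Optional

GIB = 1024 ** 3


def has_enough_space(required_bytes: int, free_bytes: int, margin: float = 0.2) -> bool:
    """Return True if free space covers the estimate plus a relative safety margin.

    The 20% default margin is an arbitrary, hypothetical choice.
    """
    if required_bytes < 0 or margin < 0:
        raise ValueError("required_bytes and margin must be non-negative")
    return free_bytes >= required_bytes * (1 + margin)


def root_free_bytes(path: str = "/") -> int:
    """Free bytes on the filesystem containing `path` (may be approximate on Btrfs)."""
    return shutil.disk_usage(path).free


def pre_update_check(
    required_bytes: int,
    free_bytes_fn: Callable[[], int] = root_free_bytes,
    clear_cache: Optional[Callable[[], None]] = None,
    margin: float = 0.2,
) -> bool:
    """Decide whether a (hypothetical) update may proceed.

    1. If there is enough space, proceed.
    2. Otherwise, if a cleanup callback is given (e.g. prompt the user,
       then run `sudo pacman -Scc`), run it once and check again.
    3. If there is still not enough space, return False so the caller
       aborts before touching any packages.
    """
    if has_enough_space(required_bytes, free_bytes_fn(), margin):
        return True
    if clear_cache is not None:
        clear_cache()
        return has_enough_space(required_bytes, free_bytes_fn(), margin)
    return False
```

For example, with an estimated 10 GiB update and 11 GiB free, the 20% margin requires 12 GiB, so the check fails; if clearing the cache frees 5 GiB, the retry passes and the update could go ahead. Checking once up front and refusing to start seems safer to me than letting the transaction fail halfway, which is what breaks the system today.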

[Annoying question: is there really no alternative to this kind of fragile, all-at-once "big bang" installation of a large update?]

I'm just mentioning it because everything else works great for me in Garuda: all software is cutting edge and it is a rolling release. This is the only issue that has bothered me for most of the time I have been using Garuda.

Thank you for your understanding.

Regards