I can’t stress enough what a bad idea this is in a large-scale environment. AI really needs to be the last resort, used only to confirm things or look things over. You can’t even trust AI not to introduce bugs into segments of code that are otherwise perfectly functional. This technology is definitely not ready for “bug detection, and security vulnerabilities”.

I would like to show the Garuda Community what AI screw-ups actually look like. If this is considered spam, please forgive me; I just want to make a point here.

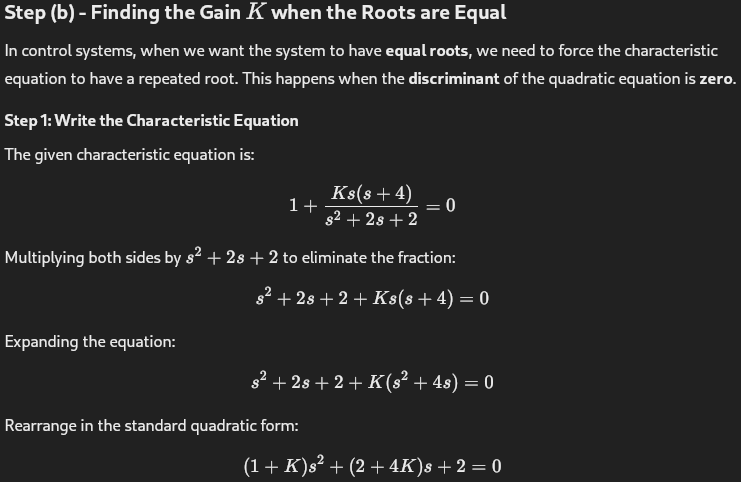

I asked ChatGPT a process controls question, this one specifically:

Here was ChatGPT’s response:

I ask

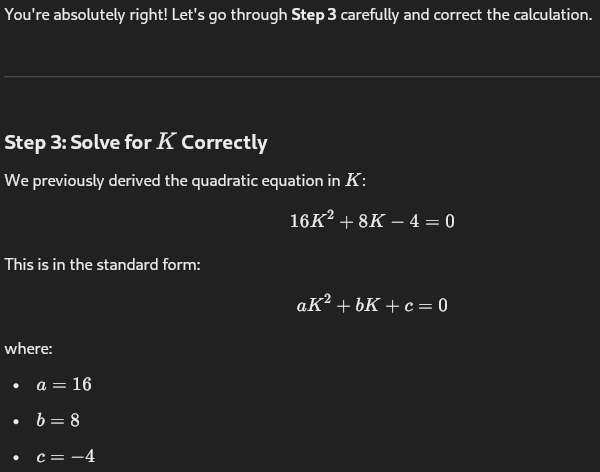

Please go over Step (b) in more detail

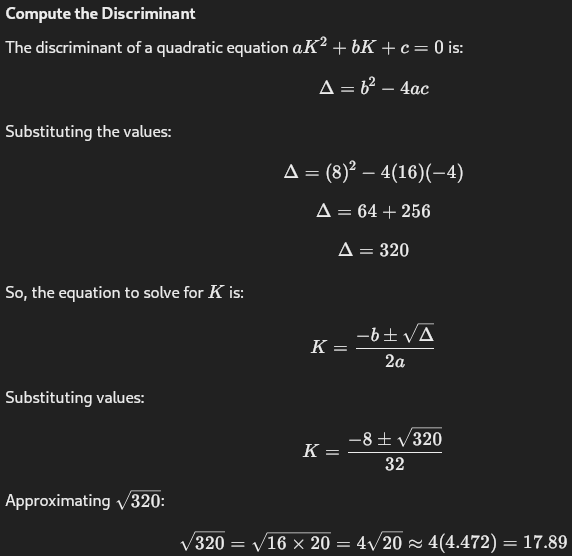

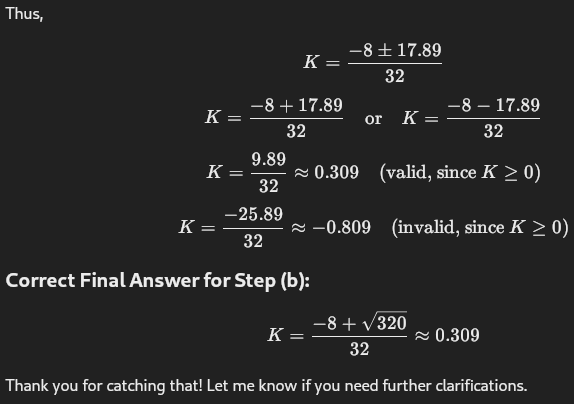

Here I catch the system unable to do basic math, and I reply: “For step 3, you have K = (-8 ± sqrt(64)) / 32. Shouldn’t the 64 be 320?”
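For anyone who wants to check the arithmetic: assuming the step 3 equation had the usual form aK² + bK + c = 0 (the actual equation is only in the screenshot, so the coefficients below are inferred from the quoted numbers, not read from it), the quadratic formula shows where the 64 came from:

$$
K = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}, \qquad 2a = 32,\ b = 8 \ \Rightarrow\ a = 16
$$

$$
b^2 = 64, \qquad b^2 - 4ac = 64 - 64c = 320 \ \Rightarrow\ c = -4
$$

In other words, √64 is what you get if the −4ac term is dropped entirely, and a hypothetical equation such as 16K² + 8K − 4 = 0 would be consistent with both numbers.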

So I ask: “Why would you give me bad information like this? What led you to make a mistake like this?”

So I reply:

Take a closer look at this with respect to “What led to the mistake”. Had this been a very complicated process control question, the error could easily have been missed. It was a tiny mistake that could have changed the outcome in a very big way. This is just basic math, the quadratic formula, something you learn in high school.
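To make the point concrete, here is a small Python sketch comparing the quadratic formula done correctly with the slip of using √(b²) in place of √(b² − 4ac). The coefficients a = 16, b = 8, c = −4 are hypothetical: the real equation is only in the screenshots, so they are inferred from the numbers quoted above (a denominator of 32, a leading −8, and a discriminant of 320).

```python
import math


def quadratic_roots(a: float, b: float, c: float) -> tuple[float, float]:
    """Return both real roots of a*K**2 + b*K + c = 0 using the quadratic formula."""
    disc = b * b - 4 * a * c  # discriminant: b^2 - 4ac
    if disc < 0:
        raise ValueError("no real roots")
    root = math.sqrt(disc)
    return ((-b + root) / (2 * a), (-b - root) / (2 * a))


def roots_dropping_4ac(a: float, b: float) -> tuple[float, float]:
    """Reproduce the slip: take sqrt(b^2) instead of sqrt(b^2 - 4ac)."""
    root = math.sqrt(b * b)  # the -4ac term is missing here on purpose
    return ((-b + root) / (2 * a), (-b - root) / (2 * a))


# Hypothetical coefficients inferred from the quoted numbers (2a = 32, b = 8, discriminant 320)
a, b, c = 16.0, 8.0, -4.0
print("correct:", quadratic_roots(a, b, c))
print("slip:   ", roots_dropping_4ac(a, b))
```

With these assumed coefficients, the correct roots come out to roughly 0.309 and −0.809, while the slip gives 0 and −0.5. One dropped term is enough to make every value of K downstream wrong.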

Let’s say this was a control system for mass-producing medical equipment, perhaps a defibrillator or a heart rate monitor. Mistakes like this can get someone killed.

Just think: this is what the 1% wants to replace lawyers, doctors, and law enforcement with. That is a different issue, though. The point is, if it can make math mistakes like this when it has millions of transistors crunching math at its core, how can anyone trust this technology to properly look over code?