I take issue with that article, and with many others like it:

First, the database issue: Wiz reported it, and it was fixed immediately (no data was harvested).

But now the serious one:

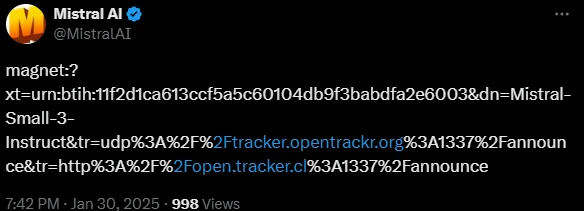

https://www.reddit.com/r/LocalLLaMA/comments/1i8ifxd/ollama_is_confusing_people_by_pretending_that_the/

For those who do not want to click through to Reddit:

> Ollama is confusing people by pretending that the little distillation models are “R1”
>
> I was baffled at the number of people who seem to think they’re using “R1” when they’re actually running a Qwen or Llama finetune, until I saw a screenshot of the Ollama interface earlier. Ollama is misleadingly pretending in their UI and command line that “R1” is a series of differently-sized models and that distillations are just smaller sizes of “R1”. Rather than what they actually are which is some quasi-related experimental finetunes of other models that Deepseek happened to release at the same time.
>
> It’s not just annoying, it seems to be doing reputational damage to Deepseek as well, because a lot of low information Ollama users are using a shitty 1.5B model, noticing that it sucks (because it’s 1.5B), and saying “wow I don’t see why people are saying R1 is so good, this is terrible”. Plus there’s misleading social media influencer content like “I got R1 running on my phone!” (no, you got a Qwen-1.5B finetune running on your phone).

There is no 1.5B “DeepSeek R1”. DeepSeek V3 itself is 671B parameters!!!

Also in the article:

ollama run deepseek-r1:1.5b

This is just asking for trouble: 1.5B models are … just for hallucinations (or really easy things). At least use the 7B Qwen or 8B Llama distill.

So no, there is no DeepSeek R1 or V3 that anything short of a supercomputer can run at more than 5 tokens per second.
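To put rough numbers on that, here is a back-of-the-envelope sketch of how much memory the weights alone would need. The assumptions are mine: about 671B total parameters for the full model, 16-bit versus 4-bit weights, and nothing counted for the KV cache or runtime overhead, so real requirements are higher. The function name is made up for illustration.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    Ignores KV cache, activations, and runtime overhead (assumption).
    """
    # billions of params * bits per param / 8 bits per byte = billions of bytes
    return params_billions * bits_per_weight / 8


# Parameter counts in billions; the 671 figure is an assumed total for the full model.
MODELS = {
    "full R1/V3 (assumed ~671B)": 671,
    "Llama 8B distill": 8,
    "Qwen 7B distill": 7,
    "Qwen 1.5B distill": 1.5,
}

for name, size in MODELS.items():
    fp16 = weight_memory_gb(size, 16)
    q4 = weight_memory_gb(size, 4)
    print(f"{name:28s} 16-bit ~ {fp16:7.1f} GB   4-bit ~ {q4:6.1f} GB")
```

Even at 4 bits per weight, the full model comes out at hundreds of GB for the weights alone, while the 7B and 8B distills land at around 4 GB, which is why they are what people can actually run at home.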

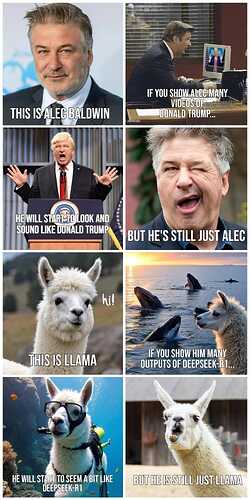

To make a comparison, it is like an article saying that if you install MS-DOS, you are running Linux!

EDIT: In Image:

(Unfortunately for me, I have only 8 GB of VRAM. When I tested, it was too slow; less than 10 tokens per second is where I draw the line.)
