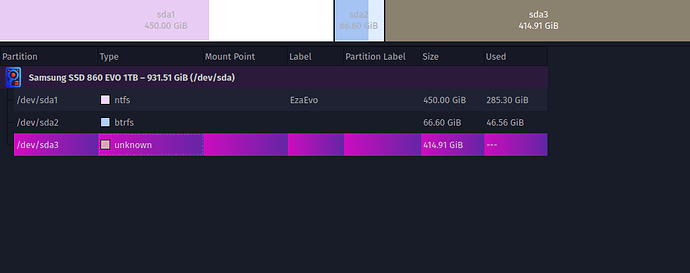

NOPE.

Another fail.

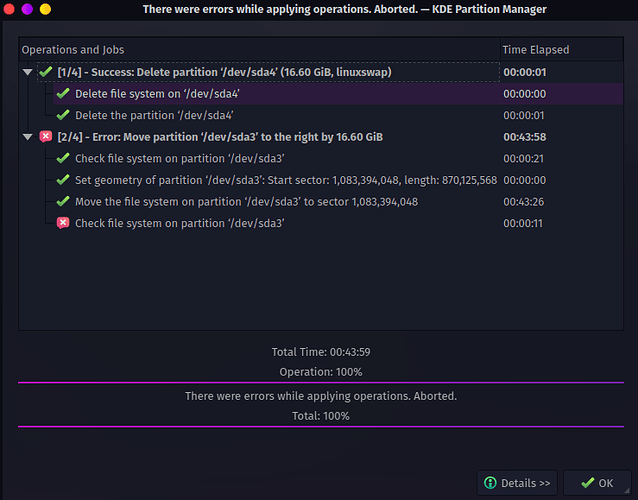

Delete partition ‘/dev/sda4’ (16.60 GiB, linuxswap)

Job: Delete file system on ‘/dev/sda4’

Command: wipefs --all /dev/sda4

Delete file system on ‘/dev/sda4’: Success

Job: Delete the partition ‘/dev/sda4’

Command: sfdisk --force --delete /dev/sda 4

Delete the partition ‘/dev/sda4’: Success

Delete partition ‘/dev/sda4’ (16.60 GiB, linuxswap): Success

Move partition ‘/dev/sda3’ to the right by 16.60 GiB

Job: Check file system on partition ‘/dev/sda3’

Command: btrfs check --repair /dev/sda3

Check file system on partition ‘/dev/sda3’: Success

Job: Set geometry of partition ‘/dev/sda3’: Start sector: 1,083,394,048, length: 870,125,568

Command: sfdisk --force /dev/sda -N 3

Set geometry of partition ‘/dev/sda3’: Start sector: 1,083,394,048, length: 870,125,568: Success

Job: Move the file system on partition ‘/dev/sda3’ to sector 1,083,394,048

Copying 42,486 chunks (445,504,290,816 bytes) from 554,697,752,576 to 554,697,752,576, direction: left.

Copying 170 MiB/second, estimated time left: 00:39:47

Copying 164 MiB/second, estimated time left: 00:38:47

Copying 156 MiB/second, estimated time left: 00:38:32

Copying 151 MiB/second, estimated time left: 00:37:26

Copying 149 MiB/second, estimated time left: 00:35:30

Copying 148 MiB/second, estimated time left: 00:33:26

Copying 147 MiB/second, estimated time left: 00:31:09

Copying 148 MiB/second, estimated time left: 00:28:37

Copying 147 MiB/second, estimated time left: 00:26:21

Copying 150 MiB/second, estimated time left: 00:23:33

Copying 152 MiB/second, estimated time left: 00:20:51

Copying 154 MiB/second, estimated time left: 00:18:17

Copying 156 MiB/second, estimated time left: 00:15:49

Copying 158 MiB/second, estimated time left: 00:13:25

Copying 159 MiB/second, estimated time left: 00:11:05

Copying 160 MiB/second, estimated time left: 00:08:48

Copying 161 MiB/second, estimated time left: 00:06:33

Copying 162 MiB/second, estimated time left: 00:04:21

Copying 163 MiB/second, estimated time left: 00:02:09

Copying 163 MiB/second, estimated time left: 00:00:00

Copying remainder of chunk size 6,291,456 from 1,000,195,751,936 to 1,000,195,751,936.

Copying 42,486 chunks (445,504,290,816 bytes) finished.

Closing device. This may take a few seconds.

Move the file system on partition ‘/dev/sda3’ to sector 1,083,394,048: Success

Job: Check file system on partition ‘/dev/sda3’

Command: btrfs check --repair /dev/sda3

Check file system on partition ‘/dev/sda3’: Error

Checking partition ‘/dev/sda3’ after resize/move failed.

Move partition ‘/dev/sda3’ to the right by 16.60 GiB: Error

I think maybe I’m done this time.
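The byte counts in the log match the partition geometry from the "Set geometry" job. Below is a minimal Python sketch of that cross-check. It assumes 512-byte logical sectors and a 10 MiB copy chunk. The log states neither; both are inferred because they reproduce the logged numbers. The helper names `move_figures` and `copy_minutes` are hypothetical.

```python
# Cross-check the byte figures in the partition-move log from its sector geometry.
# ASSUMPTIONS: 512-byte logical sectors and a 10 MiB copy chunk. Neither is stated
# in the log; both are inferred because they reproduce the logged numbers.

MIB = 1024 * 1024


def move_figures(start_sector: int, length_sectors: int,
                 sector_size: int = 512, chunk_size: int = 10 * MIB) -> dict:
    """Hypothetical helper: derive byte offsets and chunk counts for a partition move."""
    start_byte = start_sector * sector_size
    length_bytes = length_sectors * sector_size
    end_byte = start_byte + length_bytes
    # Whole chunks first, then whatever is left over at the end of the partition.
    full_chunks, remainder = divmod(length_bytes, chunk_size)
    return {
        "start_byte": start_byte,
        "length_bytes": length_bytes,
        "full_chunks": full_chunks,
        "remainder_bytes": remainder,
        "remainder_start": end_byte - remainder,
    }


def copy_minutes(length_bytes: int, mib_per_second: float) -> float:
    """Rough total copy time at a constant rate (not the tool's own ETA formula)."""
    return length_bytes / (mib_per_second * MIB) / 60


if __name__ == "__main__":
    figures = move_figures(1_083_394_048, 870_125_568)
    for name, value in figures.items():
        print(f"{name:>16}: {value:,}")
    # The log reports copy rates between roughly 147 and 170 MiB/second.
    for rate in (147, 170):
        minutes = copy_minutes(figures["length_bytes"], rate)
        print(f"at {rate} MiB/s: about {minutes:.0f} min")
```

When run as a script, it prints the following values:

* a start offset of 554,697,752,576 bytes
* a length of 445,504,290,816 bytes
* 42,486 full chunks
* a 6,291,456-byte remainder starting at 1,000,195,751,936

These agree with the "Copying" lines in the log. At a constant rate, the copy would take roughly 42 to 48 minutes.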
